Why 8 Transceivers Per Port?

Wednesday October 7, 2020By Mark Baumann

Director, Product Definition & Applications

MoSys, Inc.

The question can be asked as to why does the MoSys Accelerator Engines have 8 SerDes Transceivers per GCI port?

The fundamental question is what is the bandwidth of the device that is being accessed. In this case, the MoSys Accelerator Engines. The goal of the device is to utilize SerDes lanes to interface to the device, which has the result of minimizing the number of pins. For example, with a standard QDR type memory with LVCMOS interface signaling, it requires more than 100 pins to service the interface. That is a significant number of pins to dedicate to one device and presents a significant board routing challenge. Running large buses at hundreds of Mega-hertz and tight trace to trace skew requirements is time consuming and eats up valuable board space routing.

The strategy that MoSys has followed is to utilize SerDes lanes (that are becoming more abundant on FPGAs and ASICs) which provide high density and high bandwidth while utilizing a minimum number of pins and the routing restrictions on SerDes pins is much less restrictive than that of high speed single ended signaling. In addition, there is a clear roadmap for continued speed advances with SerDes where, in this writers opinion, we are quickly approaching the practical lifespan of single-ended signaling of large buses on PCBs.

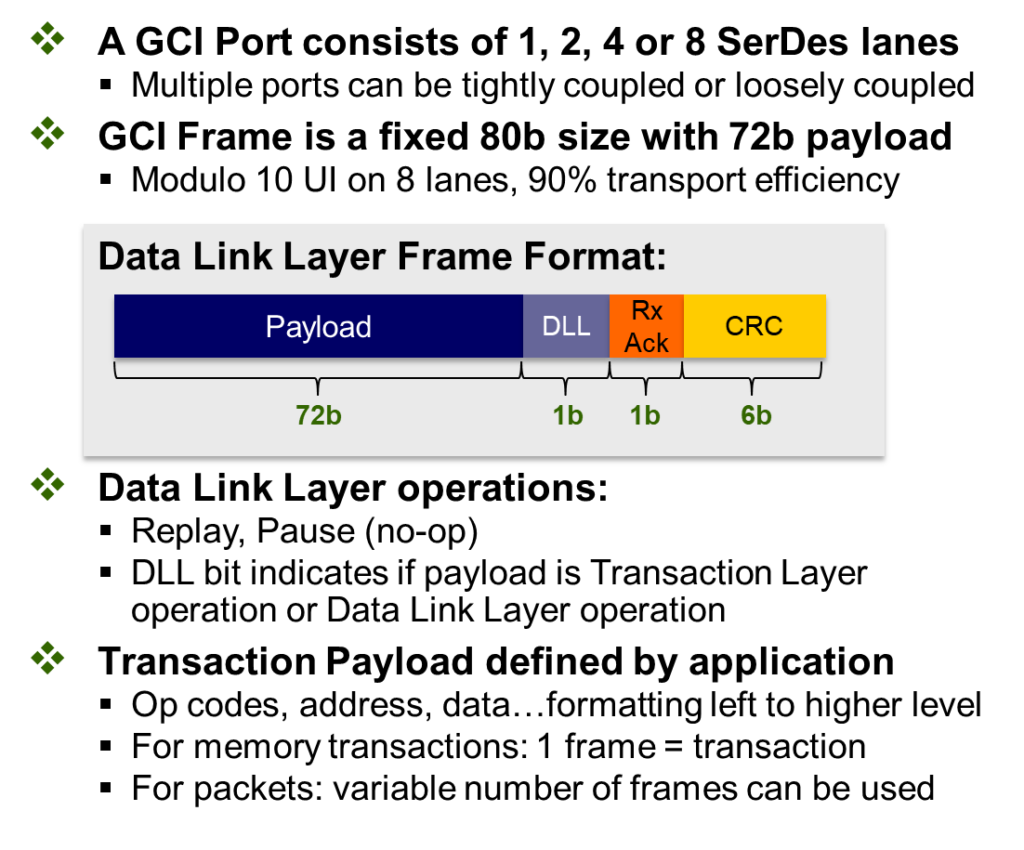

Up to this point, we have not answered the question as to why 8 transceivers. To answer that we need to look at the frame or packet of data to be transferred across the link. In the case of the MoSys Accelerator Engines we have defined an 80 bit frame. Within the frame 72 bits are:

Defined to hold data (either pure data or Address and command), as seen in the above figure and the remaining 8 bits (or 10% of the frame) hold the overhead needed to ensure that the frame is transported reliably across the link.

In the realm of SerDes protocols, this is a small overhead and has been defined and designed by MoSys to minimize the burden when dealing with a connection that only needs to be concerned with a point-to-point transfer. When this interface was designed, it was understood that the access was to a memory and that would require high speed and low latency. Since most SerDes protocols are designed for large packet transfers, it can support the larger overhead of many bytes of data per transfer. However, if the goal is to transfer just 1 to 8 words of data at a time, to carry the extra bits or bytes that a packet protocol carries would be impactful on the overall interface performance. This is the reason that a one-word transfer has as few as 8 bits of overhead in the MoSys GCI protocol.

Looking back at the transfer we have now defined that a Frame is 80 bits and high bandwidth is important, because we are dealing with the speed of memory accesses. This can impact the throughput of the whole system if memory is too slow. This has helped define the lanes of SerDes as well. If we look at the slowest offering which is 10Gbps SerDes, when using 8 lanes we can transfer a frame in one nanosecond 80 bits across 8 lanes is 10 bits per lane and at 10Gbps that takes 1 ns. So, if the memory is capable of receiving a new frame every ns, which the MoSys Accelerator Engines are capable of, the throughput will result in 1 Billion access a second at 10Gbps SerDes. This can now scale with the SerDes rate, at 12.5Gbps SerDes the throughput will be 1.25 Billion Accesses a second and so on. MoSys presently offers a device with 25Gbps SerDes that results in 2.5Billion accesses a second per 8 lane port.

A secondary benefit of using SerDes, is that if desired, it is possible to use less SerDes lanes and tune the required throughput. So, if your FPGA only has 4 SerDes lanes available for use it is possible to utilize this and the result will be half the throughput of an 8-lane implementation. With the MoSys Accelerator Engines, each device has two ports (or 16 SerDes lanes) which also allow for double the throughput of an 8 lane implementation.

To summarize, the reason for the 8 lanes is to allow for an efficient method of interfacing with a minimal number of SerDes lanes. It supports very efficient transfer of the 80-bit frames and allows for a performance tuning by utilizing the available SerDes links.

A secondary benefit is the fact that a SerDes roadmap that is active and aggressively being developed will also help drive future improvements in bandwidth when utilizing SerDes as an interface standard.

Additional Resources:

If you are looking for more technical information or need to discuss your technical challenges with an expert, we are happy to help. Email us and we will arrange to have one of our technical specialists speak with you. You can also sign up for updates. Finally, please follow us on social media so we can keep in touch.