An Overview of Graph Memory Engine Technology – Part 1 of 3

Tuesday, May 26, 2020

By Michael Miller

Chief Technology Officer

MoSys, Inc.

MoSys has developed Application-Specific Accelerator Platforms for growing applications that require functions such as packet filtering, packet forwarding and data analytics. Each platform includes an application-specific Virtual Accelerator Engine and software for programming the engine. The software works as an adaption layer between new and existing software environments and the Virtual Accelerator Engines. The Graph Memory Engine (GME) is a specific member of the family of Virtual Accelerator Engines, developed to accelerate the processing of data structures that can be represented as graphs. Part 1 of this blog will focus on the execution of GME technology. Part 2 will feature API and GME implementations. And Part 3 will cover RTL, GME interface and platform details.

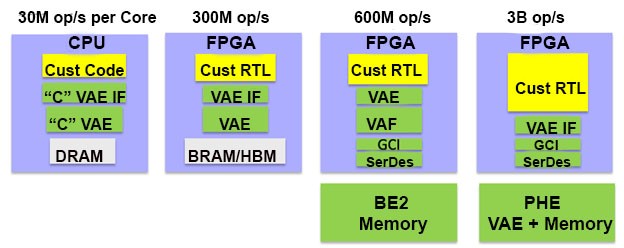

MoSys Virtual Accelerator Engines (VAE) are a range of implementations of the same accelerator function that share a common RTL interface (VAE IF) and a common application program interface (API). The VAE makes platform solutions portable across these implementations, which offers a range of performance scaling options up to 100x. Implementations range from software on a CPU, to modules in FPGAs, to solutions for very high-performance requirements, which combine an FPGA with MoSys silicon hardware accelerators that provide in-memory compute functions.

There are several key attributes at the core of the MoSys VAE value proposition:

- A single Virtual Architecture

- Single API

- Single RTL Module Interface

- Adaption Layer software

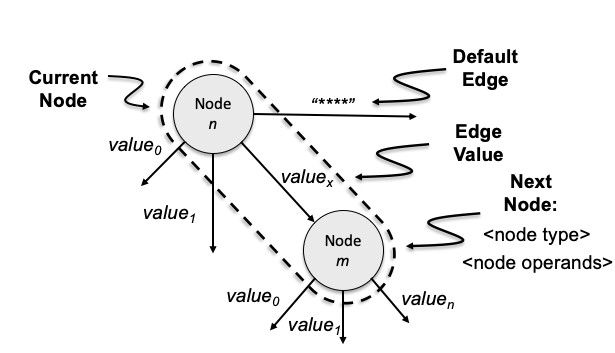

The GME processes input vectors based upon graphs composed of nodes and edges, wherein the nodes represent a state and the edges specify how to transfer to a new state (node) based upon edge values which are the result of a computation or test. The GME can be thought of as a more sophisticated State Machine.

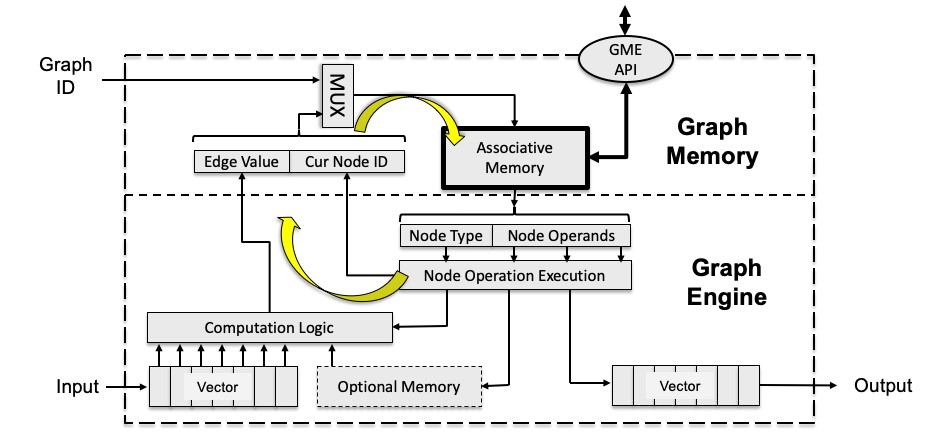

The GME can be further broken down into a Graph Memory and a Graph Engine, where the engine may be further specialized for specific applications. The Graph Engine is a generalized function for moving about the graph. It fetches edges from the Graph Memory (GM), which is typically an associative memory organized as Key/Value pairs.
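To make the Key/Value organization concrete, here is a minimal software sketch of a Graph Memory. The key layout (node ID plus edge value) and the names `GraphMemory`, `put` and `fetch` are assumptions made for illustration. They are not taken from the MoSys implementation.

```python
from typing import Dict, Optional, Tuple

# Hypothetical key layout: (node ID, edge value) -> next node ID.
Key = Tuple[int, int]


class GraphMemory:
    """Toy associative memory that stores edges as key/value pairs."""

    def __init__(self) -> None:
        self._store: Dict[Key, int] = {}

    def put(self, node_id: int, edge_value: int, next_node: int) -> None:
        # Writing an edge is a single key/value update.
        self._store[(node_id, edge_value)] = next_node

    def fetch(self, node_id: int, edge_value: int) -> Optional[int]:
        # Returns None when no matching edge is stored.
        return self._store.get((node_id, edge_value))


gm = GraphMemory()
gm.put(node_id=0, edge_value=1, next_node=5)
```

In this sketch the engine would call `fetch` once per step, so moving about the graph reduces to a sequence of associative lookups.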

Graph execution starts with an initial node specific to each graph (Graph ID), which is fetched from the GM. Each node has an ID, a Node Type and Node Operands, which specify operations to be performed on the input vector or on internally stored context. The operation produces an edge value. An operation might be as simple as selecting some bits from the input vector to produce a new edge value, it might compare selected bits with a range of values, or it might be as complex as a linear algebra calculation that is part of a Support Vector Machine (SVM). The edge value selects the edge to follow to the next node. If no edge matching the value is found, then the default edge is used.
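The execution flow described above can be sketched in a few lines of Python. Everything here is a simplified assumption for illustration: the node type names (`select_bits`, `in_range`, `leaf`), the operand layouts, the `DEFAULT` marker and the use of an integer as the input vector are hypothetical and do not describe the actual GME encoding.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

DEFAULT = "default"  # hypothetical marker for a node's default edge


@dataclass
class Node:
    node_type: str             # "select_bits", "in_range" or "leaf"
    operands: Tuple[int, ...]  # meaning depends on node_type


def edge_value(node: Node, vec: int) -> int:
    """Apply the node's operation to the input vector."""
    if node.node_type == "select_bits":
        shift, mask = node.operands
        return (vec >> shift) & mask
    if node.node_type == "in_range":
        shift, mask, lo, hi = node.operands
        return int(lo <= ((vec >> shift) & mask) <= hi)
    raise ValueError(f"unknown node type: {node.node_type}")


def run_graph(nodes: Dict[int, Node],
              edges: Dict[Tuple[int, object], int],
              start: int, vec: int) -> int:
    """Walk from the start node until a leaf node is reached."""
    node_id = start
    while nodes[node_id].node_type != "leaf":
        key = (node_id, edge_value(nodes[node_id], vec))
        if key not in edges:           # edge value not found:
            key = (node_id, DEFAULT)   # fall back to the default edge
        node_id = edges[key]
    return nodes[node_id].operands[0]  # result carried by the leaf


nodes = {
    0: Node("select_bits", (4, 0xF)),     # upper nibble of the vector
    1: Node("in_range", (0, 0xF, 2, 9)),  # lower nibble within 2..9?
    2: Node("leaf", (100,)),
    3: Node("leaf", (200,)),
    4: Node("leaf", (0,)),
}
edges = {
    (0, 0xA): 1, (0, DEFAULT): 4,
    (1, 1): 2, (1, DEFAULT): 3,
}
```

With these tables, `run_graph(nodes, edges, 0, 0xA5)` follows the `0xA` edge to node 1, passes the range test and ends at the leaf holding 100, while an input such as `0x15` has no matching edge at node 0 and takes the default edge instead.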

The node type and operands can also specify operations that are applied to memory, such as the local thread context, the global context that holds state between graph executions, and the result vector, which is returned when a graph terminates with a leaf node.
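As a rough illustration of how these three kinds of memory differ in lifetime, the sketch below hard-codes a three-node graph in a single function. The names `global_ctx`, `local_ctx` and `execute`, and the specific operations, are hypothetical and chosen only to show the scoping.

```python
from typing import Dict, List

# Global context: holds state between graph executions.
global_ctx: Dict[str, int] = {"hits": 0}


def execute(input_vector: int) -> List[int]:
    """One graph execution over a hard-coded three-node graph."""
    local_ctx: Dict[str, int] = {}  # local thread context, fresh per execution
    result: List[int] = []          # result vector, returned at the leaf

    # Node 1: store selected bits of the input vector in local context.
    local_ctx["field"] = input_vector & 0xFF

    # Node 2: update the global context.
    global_ctx["hits"] += 1

    # Leaf node: fill the result vector and terminate the graph.
    result.append(local_ctx["field"])
    result.append(global_ctx["hits"])
    return result
```

Calling `execute` twice shows the difference: the local field is recomputed each time, while the hit counter in the global context keeps increasing.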

The GME API is the interface through which higher-level software configures and manages the GME. For applications like Packet Classifiers, this can be thought of as the Control Plane stack interface. All updates to the GM are done as Key/Value pair updates. This maps easily into libraries for languages like Python, C++ or C. A common API is defined for the GME that works with all GME implementations.
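The following sketch shows one way a control-plane library could expose Key/Value updates while staying independent of the implementation underneath. It is not the MoSys API: `GmeBackend`, `SoftwareBackend`, `GmeControl`, their methods and the key layout are all hypothetical names invented for this example.

```python
from typing import Dict, Protocol, Tuple

# Hypothetical key layout: (graph ID, node ID, edge value).
Key = Tuple[int, int, int]


class GmeBackend(Protocol):
    """What any implementation (CPU, FPGA, ...) would need to provide."""

    def write(self, key: Key, value: int) -> None: ...
    def erase(self, key: Key) -> None: ...


class SoftwareBackend:
    """Pure-software stand-in that keeps the Graph Memory in a dict."""

    def __init__(self) -> None:
        self.table: Dict[Key, int] = {}

    def write(self, key: Key, value: int) -> None:
        self.table[key] = value

    def erase(self, key: Key) -> None:
        self.table.pop(key, None)


class GmeControl:
    """Control-plane facade: every change is a key/value update."""

    def __init__(self, backend: GmeBackend) -> None:
        self._backend = backend

    def add_edge(self, graph_id: int, node_id: int,
                 edge_value: int, next_node: int) -> None:
        self._backend.write((graph_id, node_id, edge_value), next_node)

    def remove_edge(self, graph_id: int, node_id: int,
                    edge_value: int) -> None:
        self._backend.erase((graph_id, node_id, edge_value))


backend = SoftwareBackend()
ctl = GmeControl(backend)
ctl.add_edge(graph_id=1, node_id=0, edge_value=0xA, next_node=1)
```

Because `GmeControl` only depends on the two-method protocol, the same calling code could in principle drive a different backend without changes, which is the portability idea behind a common API.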

Additional Resources:

Part 1 of this blog focused on the execution of GME technology. Part 2 will feature API and GME implementations. And Part 3 will cover RTL, GME interface and platform details. If you are looking for more technical information or need to discuss your technical challenges with an expert, we are happy to help. Email us and we will arrange to have one of our technical specialists speak with you. You can also sign up for updates. Finally, please follow us on social media so we can keep in touch.